Multiple Master and Worker Nodes for High Availability

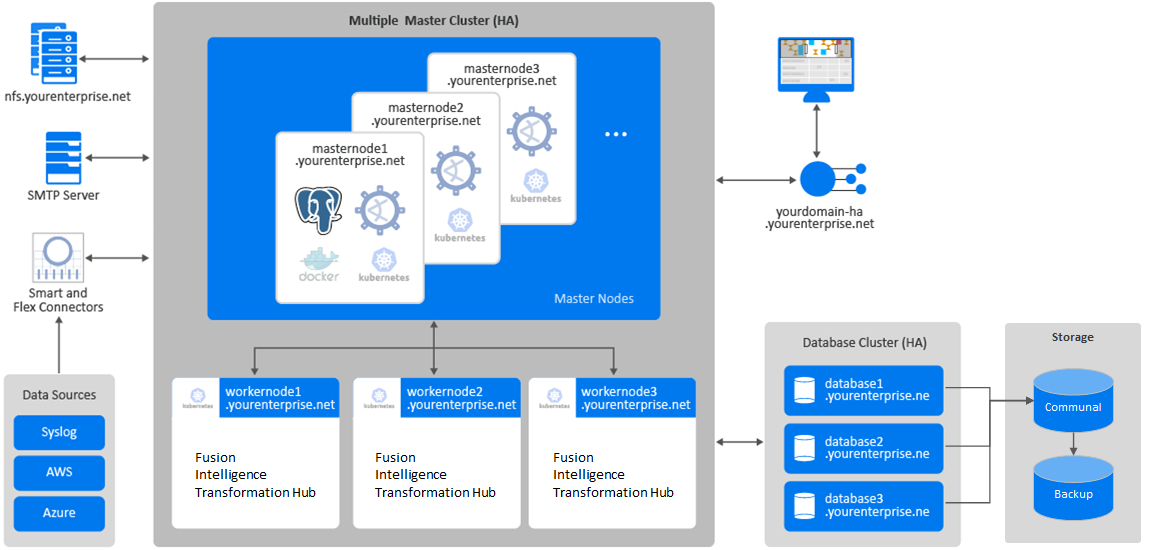

In this scenario, which deploys Intelligence with high availability, you have three master nodes connected to three worker nodes and a database cluster. Each node runs on a separate, dedicated, connected host, and all nodes use the same operating system. Each worker node processes events, with failover to another worker node if a worker fails. This deployment also requires an external server to provide NFS. The Kubernetes cluster for Intelligence includes Fusion, which provides ArcSight SOAR, and Transformation Hub.

To deploy this scenario, you can use the `example-install-config-intelligence-high_availability.yaml` config file with the ArcSight Platform Installer. For more information about the YAML files, see Using the Configuration Files in the Administrator's Guide for ArcSight Platform.

Diagram of this Scenario

Figure 2. Example deployment of Intelligence in a high-availability cluster

Characteristics of this Scenario

This scenario has the following characteristics:

- The Kubernetes cluster has three master nodes and three worker nodes, so that it can tolerate a failure of a single master and still maintain master node quorum.

- An FQDN for a virtual IP is used so that clients accessing the master nodes have a single reliable hostname that always points to the current primary master node. For example, `yourdomain-ha.yourenterprise.net`.

- Transformation Hub's Kafka and ZooKeeper are deployed to all worker nodes with data replication enabled (1 original, 1 copy) so that they can tolerate a failure of a single node and still remain operational.

- Intelligence services, as well as Transformation Hub's platform and processing services, are allocated across all worker nodes so that, if one worker node fails, Kubernetes can move its components to the remaining worker nodes and the deployment remains operational.

- Fusion is allocated to a single worker node.

- For the NFS configuration, use an NFS server that has high availability capabilities so that it is not a single point of failure.

- The database cluster has three nodes with data replication enabled (1 original and 1 copy) so that it can tolerate a failure of a single node and remain operational.
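The fault-tolerance claims in the list above reduce to simple arithmetic. The following Python sketch is a generic illustration, not ArcSight code: it assumes the master nodes form a strict-majority quorum (as etcd does in a Kubernetes control plane), and it assumes that "1 original, 1 copy" means a replication factor of 2 with each copy on a different node. The function names are hypothetical.

```python
def quorum_size(members: int) -> int:
    """Smallest strict majority of a voting group (for example, etcd members)."""
    return members // 2 + 1


def tolerated_member_failures(members: int) -> int:
    """How many members can fail while a strict majority is still available."""
    return members - quorum_size(members)


def tolerated_replica_failures(replication_factor: int) -> int:
    """Nodes that can be lost before some data has no surviving copy,
    assuming each copy of an item lives on a different node."""
    return replication_factor - 1


if __name__ == "__main__":
    masters = 3
    print(f"{masters} masters: quorum {quorum_size(masters)}, "
          f"tolerates {tolerated_member_failures(masters)} failure(s)")
    # 1 original + 1 copy, as described for Kafka and the database cluster
    replication_factor = 2
    print(f"replication factor {replication_factor}: tolerates "
          f"{tolerated_replica_failures(replication_factor)} node failure(s)")
```

The same arithmetic explains why the master count is odd: a fourth master would raise the quorum to three without letting the cluster survive a second failure.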

Guidance for Node Configuration

The worker nodes process events, with failover to another worker node if a worker fails, so there is no single point of failure. You need a minimum of nine physical or virtual machines: three dedicated master nodes, three or more dedicated worker nodes, and three database nodes. You also need a customer-provisioned, highly available NFS server (external NFS).

The following table provides guidance for deploying the capabilities across multiple nodes to support a large workload.

| Node Name | Description | RAM | CPU Cores | Disk Space | Ports |
|---|---|---|---|---|---|
| Master Nodes 1-3 | OMT Management Portal | 256 GB | 32 | 5 TB | |
| Database Nodes 1-3 | Database | 192 GB | 24 | 28 TB | Database |
| Worker 1 | Intelligence, Transformation Hub | 256 GB | 32 | 5 TB | |
| Worker 2 | Intelligence, Transformation Hub | 256 GB | 32 | 5 TB | |
| Worker 3 | Fusion, Intelligence, Transformation Hub | 256 GB | 32 | 5 TB | |
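The table gives per-node figures. The following Python sketch copies those values into a hypothetical `NodeSpec` structure, totals them across the cluster, and shows how much worker capacity remains after a single worker failure, which is the situation the worker failover described earlier depends on. It assumes all three workers have identical resources, as the table lists, and it ignores operating-system overhead and the external NFS server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """One group of identical nodes from the sizing table (hypothetical structure)."""
    role: str
    count: int
    ram_gb: int
    cores: int
    disk_tb: int


# Values copied from the sizing table; Workers 1-3 share the same specification.
NODES = [
    NodeSpec("master", 3, 256, 32, 5),
    NodeSpec("database", 3, 192, 24, 28),
    NodeSpec("worker", 3, 256, 32, 5),
]


def totals(nodes):
    """Sum node count, RAM, cores, and disk across all groups."""
    return {
        "nodes": sum(n.count for n in nodes),
        "ram_gb": sum(n.count * n.ram_gb for n in nodes),
        "cores": sum(n.count * n.cores for n in nodes),
        "disk_tb": sum(n.count * n.disk_tb for n in nodes),
    }


def worker_capacity_after_failures(nodes, failed=1):
    """RAM (GB) and cores left in the worker pool after `failed` workers go down."""
    worker = next(n for n in nodes if n.role == "worker")
    survivors = worker.count - failed
    return survivors * worker.ram_gb, survivors * worker.cores


if __name__ == "__main__":
    print(totals(NODES))
    # -> {'nodes': 9, 'ram_gb': 2112, 'cores': 264, 'disk_tb': 114}
    ram, cores = worker_capacity_after_failures(NODES)
    print(f"Workers after one failure: {ram} GB RAM, {cores} cores")
    # -> Workers after one failure: 512 GB RAM, 64 cores
```

The second figure is the pool that must hold all Intelligence and Transformation Hub workloads while one worker is down if Kubernetes is to reschedule them successfully.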