Introduction

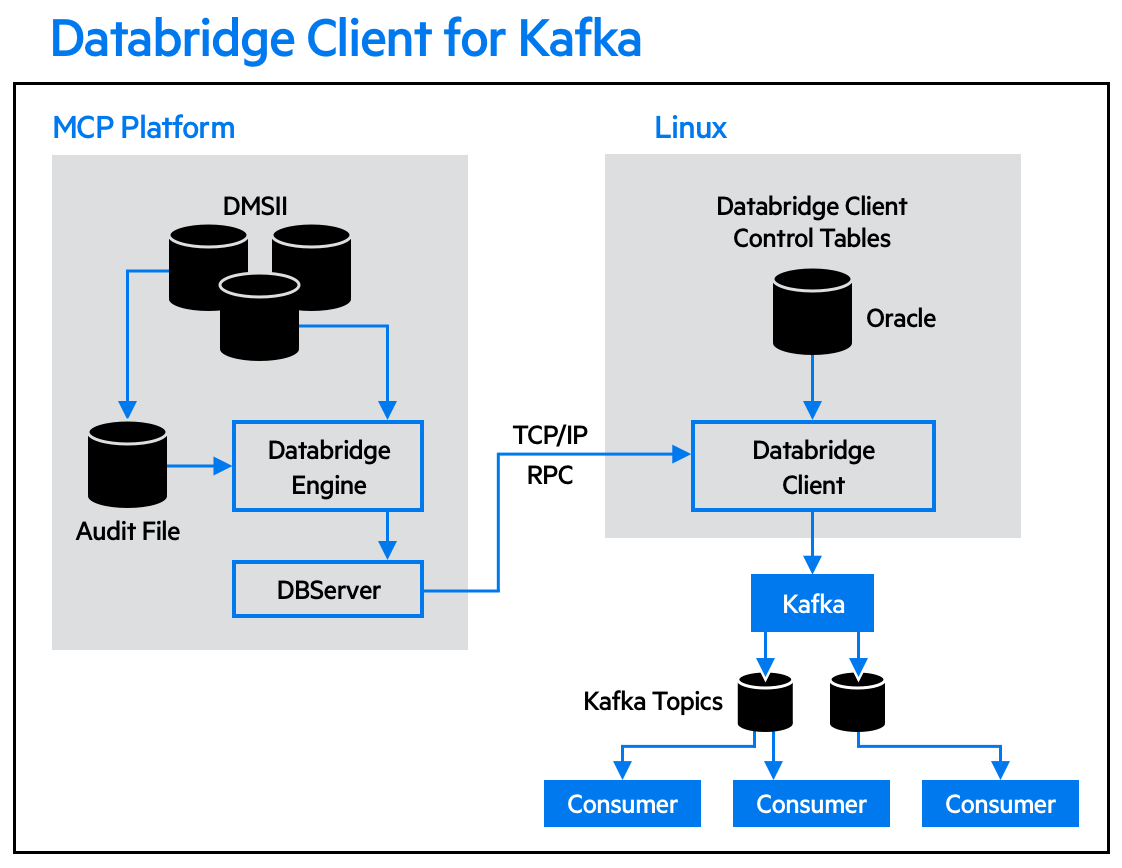

The Databridge Client for Kafka lets you use the Kafka messaging system within the Databridge architecture. Kafka is a scalable, fault-tolerant data management system that provides efficient real-time data processing.

The Databridge Client for Kafka acts as a Kafka producer. Producers in the Kafka environment publish messages to configured topics, which are distributed by brokers.

Communication with Kafka can optionally be authenticated using Kerberos, and the Kafka data stream can optionally be encrypted using SSL/TLS.

Kafka Overview and Roles

The Databridge Client for Kafka uses Kafka, an open source tool that provides real-time messaging and data processing. The client acts as a producer: it publishes data to the brokers, which make that data available to the configured consumers. The specific roles within the Kafka workflow are outlined below.

Brokers

Brokers are servers that provide the means to communicate with Kafka. Brokers manage connections to producers and consumers, and they manage topics and replicas. A broker may run standalone, or several brokers may be grouped into a small or large Kafka cluster.

The brokers that the Databridge Client for Kafka attempts to use are specified in the client configuration file. The brokers identified in the configuration file are treated as "bootstrap brokers": they are used to establish the initial connection with Kafka and to obtain information about the cluster. The full list of participating brokers is then obtained from the configured brokers.

Clusters

A Kafka cluster is a group of brokers that have been configured into an identified cluster. Brokers are grouped into clusters through a configuration file, because brokers cannot be clustered without being managed.

Consumers

Consumers subscribe to Kafka topics and pull data from them. Brokers distribute the partitions of a topic to a single consumer or among a group of consumers.

Producers

The Databridge Client for Kafka acts as a producer, which pushes (publishes) data to brokers under topics. The brokers then make that data available to the configured consumers.

Topics

Topics store messages (data) that are grouped together by category.

Additionally, each topic consists of one or more partitions. When the Databridge Client for Kafka produces a message for a topic, the partition used for a particular message is based on an internal DMSII value that is unique to the DMSII record. Thus a given DMSII record will always be sent to the same partition within a topic.
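The property described above (the same key always lands in the same partition) can be illustrated with a minimal Python sketch. The function name `choose_partition`, the sample key, and the use of CRC32 are assumptions made for illustration only; the text does not state which internal DMSII value or which hash the client or Kafka actually uses.

```python
import zlib


def choose_partition(record_key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition index deterministically.

    CRC32 is only a stand-in hash for this sketch; it is not claimed to be
    the hash used by the Databridge Client or by Kafka.
    """
    if num_partitions < 1:
        raise ValueError("a topic has at least one partition")
    return zlib.crc32(record_key) % num_partitions


# Hypothetical key standing in for the internal DMSII value of one record.
key = b"EMPLOYEE:0000000111"

first = choose_partition(key, 6)
second = choose_partition(key, 6)
print(first == second)  # the same key maps to the same partition every time
```

Because the mapping depends only on the key and the partition count, all updates for one DMSII record stay in one partition, which is what preserves their relative order for a consumer of that partition.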

The client writes JSON-formatted DMSII data to one or more topics. By default, each data set is written to a topic that is uniquely identified by concatenating the data source name and the data set name, separated by an underscore (see Example 1-1). The configuration file parameter default_topic can be used to indicate that all data sets will be written to a single topic (see Example 1-2). Each JSON-formatted record contains the data source and data set name, which identify the source of the DMSII data if needed. Whether or not default_topic is used, a topic_config.ini file is generated in the config folder so that the topic for each data set can be customized if desired (see Example 1-3).

Example 1-1: Default topics (data source name and data set name)

; Topic configuration file for update_level 23

[topics]

backords = TESTDB_backords

employee = TESTDB_employee

customer = TESTDB_customer

orddtail = TESTDB_orddtail

products = TESTDB_products

supplier = TESTDB_supplier

orders = TESTDB_orders

Example 1-2: All data sets written to a single topic with default_topic

; Topic configuration file for update_level 23

[topics]

backords = TestTopic

employee = TestTopic

customer = TestTopic

orddtail = TestTopic

products = TestTopic

supplier = TestTopic

orders = TestTopic

Example 1-3: Customized topics for individual data sets

; Topic configuration file for update_level 23

[topics]

backords = TestTopic

employee = TestEmployee

customer = TestTopic

orddtail = TestTopic

products = TestTopic

supplier = TestSupplier

orders = TestTopic
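To make the mapping in these topic files concrete, here is a minimal Python sketch that reads a `[topics]` section and resolves the topic for a data set. It is not the client's implementation: the helper names `load_topic_map` and `topic_for` are hypothetical, the sample text is a shortened copy of the customized file above, and the fallback to `<data source>_<data set>` is an assumption modeled on the default naming rule.

```python
import configparser

# Shortened copy of a customized topic configuration file.
SAMPLE_INI = """\
; Topic configuration file for update_level 23
[topics]
backords = TestTopic
employee = TestEmployee
supplier = TestSupplier
"""


def load_topic_map(ini_text):
    """Return the data-set-to-topic mapping from the [topics] section."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)  # lines starting with ';' are comments
    return dict(parser["topics"])


def topic_for(data_source, data_set, topic_map):
    """Look up a data set's topic; fall back to <data source>_<data set>."""
    key = data_set.lower()  # configparser lower-cases option names
    return topic_map.get(key, f"{data_source}_{key}")


topic_map = load_topic_map(SAMPLE_INI)
print(topic_for("TESTDB", "employee", topic_map))
print(topic_for("TESTDB", "orders", topic_map))
```

Here `employee` resolves to its customized topic, while `orders`, which is absent from the shortened sample, falls back to the default-style name.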


Data Format

The client writes replicated DMSII data to Kafka topics in JSON format. Each JSON record consists of name:value pairs that correspond to the data source ("namespace" : "data_source"), the data set name ("name" : "dmsii_datasetname"), and the DMSII items ("fields", which holds the "dmsii_itemname" : value pairs).

Given the DASDL:

EMPLOYEE DATA SET "EMPLOYEE"

POPULATION = 150

(

EMPLOY-ID NUMBER(10);

LAST-NAME ALPHA(10);

FIRST-NAME ALPHA(15);

EMP-TITLE ALPHA(20);

BIRTHDATE ALPHA(8);

HIRE-DATE ALPHA(8);

ADDRESS ALPHA(40);

CITY ALPHA(15);

REGION ALPHA(10);

ZIP-CODE ALPHA(8);

COUNTRY ALPHA(12);

HOME-PHONE ALPHA(15);

EXTENSION ALPHA(5);

NOTES ALPHA(200);

ALPHAFILLER ALPHA(10);

REPORTS-TO NUMBER(10);

NULL-FIELD ALPHA(10);

CREDB-RCV-DAYS NUMBER(5) OCCURS 12 TIMES;

ITEMH-ADJUSTMENT NUMBER(4) OCCURS 5 TIMES;

EMPLOY-DATE DATE INITIALVALUE = 19570101;

FILLER SIZE(30);

), EXTENDED=TRUE;

A sample JSON record conforming to the DASDL layout above will be formatted as follows:

{

"namespace":"TESTDB",

"name":"employee",

"fields":

{

"update_type": 0,

"employ_id":111,

"last_name":"Davolio",

"first_name":"Nancy",

"emp_title":"Sales Representative",

"birthdate":"2/24/50",

"hire_date":"6/5/87",

"address":"507 - 20th Ave.E. Apt. 2A",

"city":"Seattle",

"region":"WA",

"zip_code":"76900",

"country":"USA",

"home_phone":"(206)555-9856",

"extension":"5467",

"notes":"Education includes a BA in Psychology from Colorado State University in 1970. She also completed \"The Art of the Cold Call.\" Nancy is a member of Toastmasters International.",

"alphafiller":"XXXXXXXXXX",

"reports_to":666,

"null_field":null,

"credb_rcv_days_01":11,

"credb_rcv_days_02":21,

"credb_rcv_days_03":32,

"credb_rcv_days_04":43,

"credb_rcv_days_05":54,

"credb_rcv_days_06":65,

"credb_rcv_days_07":76,

"credb_rcv_days_08":87,

"credb_rcv_days_09":98,

"credb_rcv_days_10":109,

"credb_rcv_days_11":1110,

"credb_rcv_days_12":1211,

"itemh_adjustment_001":1,

"itemh_adjustment_002":10,

"itemh_adjustment_003":100,

"itemh_adjustment_004":1000,

"itemh_adjustment_005":10000,

"employ_date":"19570101000000",

"update_time":"20190308080314",

"audit_ts":"20190308090313"

}

}
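On the consuming side, a record in this layout can be read with any JSON parser. The following Python sketch parses a shortened copy of the sample record and regroups the numbered OCCURS items (for example credb_rcv_days_01, credb_rcv_days_02) into lists. The helper `group_occurs` and the suffix pattern are assumptions based only on the sample above; a DMSII item whose own name ends in an underscore and digits would be grouped incorrectly by this simple rule.

```python
import json
import re
from collections import defaultdict

# Shortened copy of the sample record, for illustration only.
SAMPLE = """
{"namespace":"TESTDB","name":"employee","fields":{
  "update_type":0,"employ_id":111,"last_name":"Davolio","null_field":null,
  "credb_rcv_days_01":11,"credb_rcv_days_02":21,
  "itemh_adjustment_001":1,"itemh_adjustment_002":10}}
"""

# Assumed naming pattern: <item name>_<occurrence number>.
OCCURS_SUFFIX = re.compile(r"^(?P<base>.+)_(?P<index>\d+)$")


def group_occurs(fields):
    """Collect numbered OCCURS items into lists keyed by the base item name."""
    result = {}
    arrays = defaultdict(dict)
    for name, value in fields.items():
        match = OCCURS_SUFFIX.match(name)
        if match:
            arrays[match["base"]][int(match["index"])] = value
        else:
            result[name] = value
    for base, by_index in arrays.items():
        result[base] = [by_index[i] for i in sorted(by_index)]
    return result


record = json.loads(SAMPLE)
fields = group_occurs(record["fields"])
print(record["namespace"], record["name"])
print(fields["credb_rcv_days"])
```

The "namespace" and "name" values identify the data source and data set, so a consumer reading a shared topic can route each record without relying on the topic name.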